K-Nearest Neighbors in Market Research

K-nearest neighbors in market research isn’t just another algorithm. It’s a fundamentally different way of seeing customer behavior—one that often reveals your most valuable opportunities are hiding where you’re not even looking.

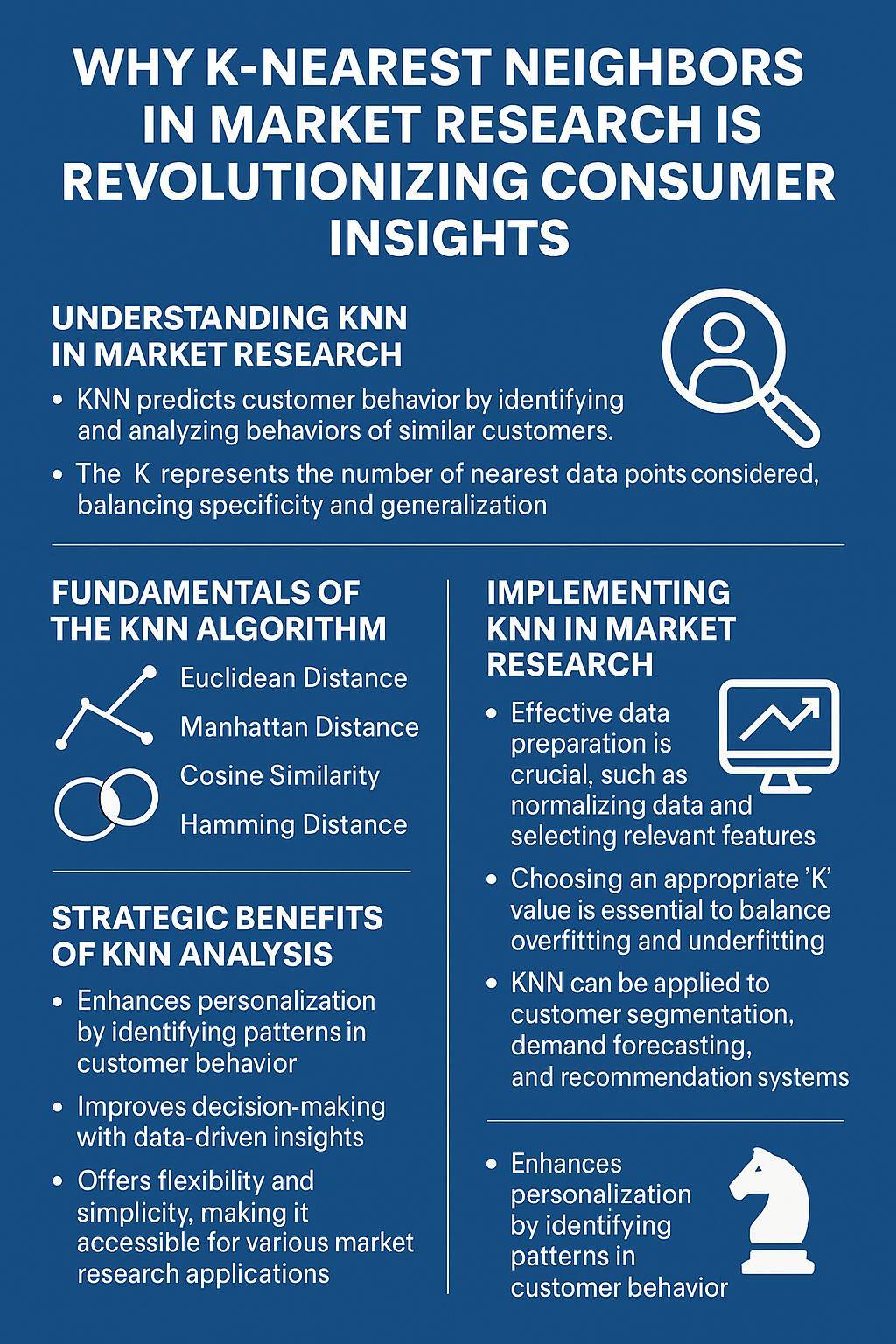

What is K-Nearest Neighbors in Market Research?

Strip away the math jargon, and K-nearest neighbors (KNN) is beautifully intuitive: things that are similar tend to behave similarly.

K-nearest neighbors in market research operates on a deceptively simple premise: to predict how a customer might behave, find other customers who are most similar and see what they did. No complex equations. No black-box algorithms. Just the power of similarity and patterns.

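The “K” simply represents how many similar data points (neighbors) you consider when making a prediction. Is one neighbor enough? Five? Twenty? The right K value balances being too narrow (a small K lets noise and outliers drive predictions, which is overfitting) against being too broad (a large K over-smooths and blurs genuine local patterns).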

While more sophisticated algorithms might squeeze out marginal improvements in accuracy, they often sacrifice interpretability. And in market research, understanding why a prediction works matters as much as the prediction itself.

Fundamentals of the KNN Algorithm

KNN is about measuring distance—not physical distance, but similarity distance. Imagine plotting your customers on a map where distance represents how similar they are across multiple dimensions (age, spending patterns, browsing behavior, etc.).

The algorithm works in three deceptively simple steps:

- Calculate the “distance” between a new data point and all existing data points

- Identify the K nearest neighbors (most similar points)

- Either average their values (for regression) or take a majority vote (for classification)
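Here is a minimal sketch of those three steps in plain Python. It assumes Euclidean distance and small, already-scaled numeric features. The customer data and the function names (`k_nearest`, `knn_classify`, `knn_regress`) are hypothetical illustrations, not a production implementation:

```python
import math
from collections import Counter


def k_nearest(train_X, query, k):
    """Steps 1 and 2: distance to every stored point, then keep the k closest."""
    # Step 1: Euclidean distance from the query to each existing customer
    distances = [(math.dist(row, query), i) for i, row in enumerate(train_X)]
    # Step 2: sort by distance and keep the indices of the k nearest
    distances.sort()
    return [i for _, i in distances[:k]]


def knn_classify(train_X, train_y, query, k=3):
    """Step 3 (classification): majority vote among the k neighbors."""
    idx = k_nearest(train_X, query, k)
    return Counter(train_y[i] for i in idx).most_common(1)[0][0]


def knn_regress(train_X, train_y, query, k=3):
    """Step 3 (regression): average of the k neighbors' values."""
    idx = k_nearest(train_X, query, k)
    return sum(train_y[i] for i in idx) / k


# Hypothetical, already-scaled features: (visits per month, average basket size)
X = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (5.0, 5.0), (5.2, 4.8), (4.9, 5.1)]
bought_premium = ["no", "no", "no", "yes", "yes", "yes"]
annual_spend = [120.0, 130.0, 110.0, 900.0, 950.0, 880.0]

print(knn_classify(X, bought_premium, (5.1, 5.0)))  # yes
print(knn_regress(X, annual_spend, (1.0, 1.0)))     # 120.0
```

The brute-force search touches every stored point. That is fine at this scale and is the cost that tree indexes and approximate methods exist to reduce.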

The magic lies in how we measure distance. While Euclidean distance (straight-line distance between points) is common, market researchers often find success with other metrics:

- Manhattan distance (sum of absolute differences) for discrete variables

- Cosine similarity for capturing preference patterns regardless of magnitude

- Hamming distance for categorical variables
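To make the differences concrete, here is a sketch of the four measures in plain Python. The spend vectors and categorical profiles are hypothetical. A light buyer and a heavy buyer with the same spending mix are far apart in Euclidean and Manhattan terms but identical under cosine similarity. Cosine similarity is commonly converted into a distance as 1 minus the similarity:

```python
import math


def euclidean(a, b):
    # Straight-line distance between two feature vectors
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    # Sum of absolute differences
    return sum(abs(x - y) for x, y in zip(a, b))


def cosine_similarity(a, b):
    # 1.0 means the same direction (same preference mix), regardless of magnitude
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def hamming(a, b):
    # Share of categorical attributes that differ
    return sum(x != y for x, y in zip(a, b)) / len(a)


# Hypothetical monthly spend across three product categories
light_buyer = [1, 2, 1]
heavy_buyer = [10, 20, 10]  # same mix, ten times the volume

print(euclidean(light_buyer, heavy_buyer))              # large
print(manhattan(light_buyer, heavy_buyer))              # 36
print(cosine_similarity(light_buyer, heavy_buyer))      # ~1.0
print(1 - cosine_similarity(light_buyer, heavy_buyer))  # cosine distance ~0.0

# Hypothetical categorical profiles: one of three attributes differs
print(hamming(("urban", "mobile", "loyal"), ("urban", "desktop", "loyal")))
```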

K-nearest neighbors in market research isn’t mathematically intimidating. Its power comes from its conceptual elegance: similar customers tend to make similar choices. That principle has guided human intuition since the first market transaction; KNN simply scales it with computational precision.

Implementing KNN in Market Research

K-nearest neighbors in market research is a strategic capability that bridges data science and business strategy.

Implementing K-nearest neighbors in market research requires methodical preparation—but don’t let perfect be the enemy of progress.

Start with ruthless data preparation:

- Normalize numerical features (price sensitivity scores, purchase frequency, etc.) to prevent high-magnitude variables from dominating

- Convert categorical variables (brand preferences, demographic categories) through techniques like one-hot encoding

- Address missing values strategically—KNN itself can actually be used to impute missing data
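One way to express those three preparation steps is a scikit-learn pipeline. This is a sketch under stated assumptions. The column names and values are invented. The choices of 2 imputation neighbors and K=3 are arbitrary. Imputation runs on the raw values, before scaling:

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import KNNImputer
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical survey extract; column names are illustrative only
df = pd.DataFrame({
    "price_sensitivity": [7.0, 6.5, np.nan, 2.0, 1.5, 2.5, 6.8, 1.8],
    "purchases_per_year": [4, 5, 3, 40, 38, np.nan, 6, 42],
    "preferred_brand": ["A", "A", "B", "C", "C", "C", "B", "A"],
    "bought_premium": [0, 0, 0, 1, 1, 1, 0, 1],
})
numeric = ["price_sensitivity", "purchases_per_year"]
categorical = ["preferred_brand"]

preprocess = ColumnTransformer([
    # Fill each gap from the 2 most similar rows, then put features on a common scale
    ("num", Pipeline([("impute", KNNImputer(n_neighbors=2)),
                      ("scale", StandardScaler())]), numeric),
    # One binary column per brand; brands unseen during fitting become all zeros
    ("cat", OneHotEncoder(handle_unknown="ignore"), categorical),
])

model = Pipeline([("prep", preprocess), ("knn", KNeighborsClassifier(n_neighbors=3))])
model.fit(df[numeric + categorical], df["bought_premium"])

new_customer = pd.DataFrame({"price_sensitivity": [2.2],
                             "purchases_per_year": [35],
                             "preferred_brand": ["C"]})
print(model.predict(new_customer))
```

Wrapping preparation and the classifier in one pipeline means the imputation and scaling learned from training data are reused unchanged on new customers.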

Implementation follows a clear progression:

- Split your data into training and testing sets (typically 70/30 or 80/20)

- Select potential feature sets and distance metrics

- Experiment with different K values using cross-validation

- Evaluate performance using appropriate metrics (accuracy, precision, recall, F1-score)

- Implement the model with continuous monitoring and refinement
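Here is a compact sketch of that progression with scikit-learn. Synthetic data from `make_classification` stands in for a real customer table. The grid of K values, the two metrics, and F1 as the selection score are illustrative choices, not recommendations:

```python
from sklearn.datasets import make_classification
from sklearn.metrics import classification_report, f1_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a customer table: 1,000 rows, 6 features, binary outcome
X, y = make_classification(n_samples=1000, n_features=6, n_informative=4, random_state=0)

# 1. Hold out 30% for final testing; stratify keeps the class balance in both sets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# 2-3. Try several K values and distance metrics with 5-fold cross-validation
pipe = make_pipeline(StandardScaler(), KNeighborsClassifier())
grid = GridSearchCV(
    pipe,
    param_grid={
        "kneighborsclassifier__n_neighbors": [1, 3, 5, 7, 9, 15, 25],
        "kneighborsclassifier__metric": ["euclidean", "manhattan"],
    },
    scoring="f1",
    cv=5,
)
grid.fit(X_train, y_train)

# 4. Evaluate the chosen configuration once, on data it has never seen
y_pred = grid.predict(X_test)
print(grid.best_params_)
print("test F1:", round(f1_score(y_test, y_pred), 3))
print(classification_report(y_test, y_pred))
```

The scaler sits inside the pipeline, so each cross-validation fold is scaled using only its own training portion. This avoids leaking information from the validation rows into the distance calculations.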

The tools landscape has evolved dramatically. While Python (with scikit-learn) and R dominate custom implementations, specialized market research platforms increasingly offer KNN capabilities without requiring programming expertise.

Measuring success requires looking beyond raw accuracy. False positives and false negatives carry different business costs in market research applications. A luxury brand might tolerate false positives in identifying potential high-value customers (worth the outreach cost) but find false negatives catastrophically expensive (missing a high-lifetime-value prospect).
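Accuracy alone can hide this asymmetry. The sketch below uses hypothetical costs: 50 per wasted outreach and 5,000 per missed prospect. It compares two sets of predictions with identical accuracy but very different business impact:

```python
# Hypothetical unit costs, for illustration only
COST_FALSE_POSITIVE = 50      # outreach spent on someone who was never high-value
COST_FALSE_NEGATIVE = 5_000   # lifetime value lost by ignoring a genuine prospect


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def business_cost(y_true, y_pred):
    false_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    false_neg = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return false_pos * COST_FALSE_POSITIVE + false_neg * COST_FALSE_NEGATIVE


# 1 = high-value customer; ten hypothetical prospects
actual = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
misses_one_prospect = [0, 1, 0, 0, 0, 0, 0, 0, 0, 0]  # one false negative
one_extra_outreach = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]   # one false positive

for name, pred in [("misses one prospect", misses_one_prospect),
                   ("one extra outreach", one_extra_outreach)]:
    print(f"{name}: accuracy={accuracy(actual, pred):.0%}, cost={business_cost(actual, pred):,}")
```

Both prediction sets score 90% accuracy, yet one costs a hundred times more than the other. With a fitted scikit-learn KNN classifier, one practical lever is to act on `predict_proba` with a threshold below 0.5 when false negatives are the expensive error.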

Comparing KNN with Other Machine Learning Algorithms

Not all algorithms are created equal for market research applications. The choice between K-nearest neighbors in market research and alternatives should be driven by your specific objectives and data realities.

KNN vs. K-Means Clustering

These cousins sound similar but serve different purposes. K-means clustering is unsupervised: it groups unlabeled data into K distinct clusters. KNN is supervised: it uses labeled historical cases to predict outcomes for new data points, and its K counts neighbors, not clusters. I’ve seen marketing teams confuse these repeatedly, typically with expensive consequences.

KNN vs. Decision Trees

Decision trees create explicit rule hierarchies that are highly interpretable but often less accurate for complex patterns. KNN captures nuanced non-linear relationships but offers less explicit rationale.

KNN vs. Regression Models

Linear and logistic regression excel at understanding variable relationships and quantifying their impact, which makes them ideal for determining which factors drive purchase decisions. KNN makes no assumptions about variable relationships, instead relying purely on similarity patterns.
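The K in K-means and the K in KNN mean different things, which is one source of the confusion. The sketch below runs both on synthetic two-group data. K-means never sees the outcome and returns segment labels. KNN needs labeled history and predicts the outcome for new customers. The data and the `churned` label are hypothetical:

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.neighbors import KNeighborsClassifier

rng = np.random.default_rng(0)
# Two hypothetical customer groups in a 2-feature space
X = np.vstack([rng.normal(0, 0.5, (50, 2)), rng.normal(5, 0.5, (50, 2))])
churned = np.array([0] * 50 + [1] * 50)  # known outcome, used only by KNN

# K-means: unsupervised; K = number of clusters; the outcome is never used
segments = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X)

# KNN: supervised; K = number of neighbors consulted; learns from labeled history
knn = KNeighborsClassifier(n_neighbors=5).fit(X, churned)
new_customers = np.array([[0.2, -0.1], [4.8, 5.3]])

print("segment labels (first 5):", segments[:5])
print("predicted churn for new customers:", knn.predict(new_customers))
```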

When to choose KNN:

- When you need non-linear pattern recognition

- When interpretability is important but not paramount

- When your data is clean and well-structured

- When real-time prediction is not a computational concern

- When you have a moderate-sized dataset (neither tiny nor massive)

When to look elsewhere:

- When you need explicit explanatory power for regulatory compliance

- When computational efficiency at massive scale is critical

- When your data has extremely high dimensionality

- When new data arrives continuously and prediction latency must stay low (every stored observation adds to KNN’s search cost)

The Strategic Benefits of KNN Analysis

The most sophisticated algorithm is worthless if decision-makers don’t trust or understand it enough to act on its insights.

The business advantages of K-nearest neighbors in market research extend far beyond marginal improvements in predictive accuracy.

Precision in Prediction

KNN excels at identifying specific opportunities other methods miss. A luxury hospitality brand discovered through K-nearest neighbors in market research that customers booking certain room categories in specific seasonal patterns were 5.7 times more likely to eventually purchase vacation real estate—a pattern completely invisible to their regression models.

This precision allowed for targeted cultivation that generated $14.3 million in real estate commissions in the first year alone.

Simplicity and Interpretability

In an era of increasingly black-box algorithms, KNN offers refreshing transparency. When a healthcare client’s neural network made inexplicable patient behavior predictions, they switched to a KNN model. The ability to examine the specific similar cases driving each prediction not only improved accuracy but also built clinician trust in the model’s recommendations.

Adaptability to New Data

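Many predictive models require complete retraining when new data arrives. KNN has no training phase in the usual sense: it stores the reference cases and does its work at prediction time. New observations can therefore be added to the reference set immediately, without re-estimating any parameters. This makes K-nearest neighbors in market research exceptionally adaptable to rapidly changing market conditions.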
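Here is a minimal sketch of why this works, using a hypothetical `IncrementalKNN` class. The model is just the stored examples, so adding an observation is an append, and the next prediction already reflects it. The tradeoff is that every stored point adds to the search cost at prediction time. scikit-learn’s `KNeighborsClassifier` has no `partial_fit` method. With that library you would call `fit` again on the combined data, which for KNN mainly means storing the data and rebuilding the neighbor index:

```python
import math
from collections import Counter


class IncrementalKNN:
    """Hypothetical minimal KNN: the 'model' is just the stored examples."""

    def __init__(self, k=3):
        self.k = k
        self.X = []
        self.y = []

    def add(self, x, label):
        # Nothing to re-estimate: the new observation is simply appended
        self.X.append(tuple(x))
        self.y.append(label)

    def predict(self, query):
        # Brute-force search over everything stored so far
        order = sorted(range(len(self.X)), key=lambda i: math.dist(self.X[i], query))
        nearest = order[: self.k]
        return Counter(self.y[i] for i in nearest).most_common(1)[0][0]


knn = IncrementalKNN(k=3)
for point in [(1.0, 1.0), (1.1, 0.9), (0.9, 1.2)]:
    knn.add(point, "loyal")
before = knn.predict((5.0, 5.0))  # only "loyal" examples exist so far

# A new behavior appears in the market: store it, no retraining step
for point in [(5.1, 4.9), (4.8, 5.2), (5.0, 5.1)]:
    knn.add(point, "switcher")
after = knn.predict((5.0, 5.0))

print(before, after)  # loyal switcher
```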

Competitive Advantage in Decision-Making

The strategic advantage of KNN lies not just in better predictions, but in revealing non-obvious relationships. The ROI from sophisticated KNN implementations in market research typically ranges from 300% to 700%, with payback periods averaging under six months. The highest returns come not from operational efficiency but from identifying opportunities and risks that would otherwise remain invisible.

Best Practices for Implementing KNN in Market Research

K-nearest neighbors in market research requires both technical excellence and business integration to deliver its full potential.

After seeing hundreds of KNN implementations across diverse industries, clear patterns emerge separating transformative successes from expensive disappointments.

Data Preparation Essentials

Data quality determines whether your KNN model will be a competitive advantage or an expensive distraction. Beyond basic cleaning, successful implementations require:

- Feature scaling to ensure distance calculations are meaningful

- Dimensionality reduction to mitigate the curse of dimensionality

- Thoughtful handling of categorical variables and missing data

- Domain-informed feature engineering

Selecting the Optimal K Value

The “right” K value balances noise reduction against over-smoothing. Too small, and your model becomes hypersensitive to noise and outliers. Too large, and it misses important local patterns.
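Since the right K is found empirically, a common approach is to score a range of candidates with cross-validation and compare them. The sketch below does this on synthetic data with 10% label noise (`flip_y=0.1`). The candidate list is arbitrary, and it uses odd values to avoid tied votes in a two-class problem:

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data; flip_y=0.1 randomly flips 10% of labels to simulate noise
X, y = make_classification(n_samples=600, n_features=5, n_informative=3,
                           flip_y=0.1, random_state=1)

scores = {}
for k in [1, 3, 5, 11, 21, 51, 151]:
    pipe = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=k))
    # Mean accuracy across 5 folds for this K
    scores[k] = cross_val_score(pipe, X, y, cv=5).mean()

for k, s in scores.items():
    print(f"K={k:>3}  mean CV accuracy={s:.3f}")

best_k = max(scores, key=scores.get)
print("best K on this data:", best_k)
```

Very small K values tend to chase the flipped labels, and very large ones tend to flatten real structure. The sweet spot depends on the data, which is why it is measured rather than assumed.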

Feature Selection Strategies

More features don’t necessarily mean better predictions in KNN. The curse of dimensionality means that as dimensions increase, the concept of “nearest” becomes increasingly meaningless.

Successful implementations use techniques like:

- Principal Component Analysis (PCA) for dimension reduction

- Random Forest feature importance analysis

- Sequential Feature Selection

- Domain expertise to focus on predictively influential variables
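The sketch below compares two of these techniques with scikit-learn on synthetic data where only 4 of 20 features carry signal. `PCA(n_components=0.9)` keeps the components that explain 90% of the variance. `SequentialFeatureSelector` greedily adds the original features that most improve KNN’s cross-validated score. The feature counts and K=7 are assumptions for illustration. PCA preserves variance rather than predictive signal, so it is worth checking both against an all-features baseline:

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in: 20 features, only 4 informative, the rest pure noise
X, y = make_classification(
    n_samples=500, n_features=20, n_informative=4, n_redundant=0, random_state=2
)

candidates = {
    # Baseline: every feature enters the distance calculation
    "all 20 features": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=7)),
    # PCA: keep the components that explain 90% of the variance
    "PCA (90% variance)": make_pipeline(
        StandardScaler(), PCA(n_components=0.9), KNeighborsClassifier(n_neighbors=7)
    ),
    # Forward selection: greedily keep the 4 original features that help KNN most
    "forward selection (4)": make_pipeline(
        StandardScaler(),
        SequentialFeatureSelector(
            KNeighborsClassifier(n_neighbors=7), n_features_to_select=4,
            direction="forward", cv=3,
        ),
        KNeighborsClassifier(n_neighbors=7),
    ),
}

cv_scores = {name: cross_val_score(model, X, y, cv=5).mean()
             for name, model in candidates.items()}
for name, score in cv_scores.items():
    print(f"{name:<22} mean CV accuracy = {score:.3f}")
```

Placing the selector inside the pipeline means feature selection is repeated within each outer fold. This keeps the comparison honest.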

Testing and Validation Approaches

The most reliable validation approach is out-of-sample testing, ideally with time-separated validation data. When a retail client tested their apparently successful KNN model on new data collected six months later, performance dropped significantly—revealing that their model was detecting temporary rather than persistent patterns.
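Here is a sketch of time-ordered validation with scikit-learn’s `TimeSeriesSplit`, assuming the rows are already sorted from oldest to newest. Each fold trains only on earlier rows and scores on later ones. The data here is synthetic and stable. With real data, scores that fall in the later folds are the warning sign described above:

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit
from sklearn.neighbors import KNeighborsClassifier

rng = np.random.default_rng(3)
# Hypothetical rows, assumed already sorted from oldest to newest observation
X = rng.normal(size=(600, 4))
y = (X[:, 0] + 0.5 * rng.normal(size=600) > 0).astype(int)

fold_scores = []
for train_idx, test_idx in TimeSeriesSplit(n_splits=4).split(X):
    # Every training row precedes every test row: "fit now, score later"
    assert train_idx.max() < test_idx.min()
    model = KNeighborsClassifier(n_neighbors=7).fit(X[train_idx], y[train_idx])
    fold_scores.append(model.score(X[test_idx], y[test_idx]))

print("accuracy per later period:", [round(s, 3) for s in fold_scores])
```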

Implementation Challenges and Solutions

The greatest implementation challenge is often translation from insight to action. A media company’s KNN model produced excellent predictions that languished unused because business teams couldn’t operationalize the insights.

The solution was creating a simplified “action translation layer” that converted complex nearest-neighbor findings into straightforward business recommendations. This increased implementation of model insights from 14% to 78%.

Common Challenges and Solutions in KNN Analysis

Let’s address the toughest obstacles in implementing K-nearest neighbors in market research and how to overcome them.

The “Dimensionality Curse” Problem

As dimensions increase, the concept of “nearest” becomes increasingly meaningless—a phenomenon called the curse of dimensionality. In high-dimensional spaces, nearly all points become equidistant from each other, rendering KNN ineffective.
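The effect is easy to reproduce. This NumPy sketch draws random points in a unit hypercube and measures the relative contrast between the farthest and nearest point from a random query. The sample size and the list of dimensions are arbitrary. As dimensions grow, the contrast shrinks, so the “nearest” neighbor is barely nearer than the farthest one:

```python
import numpy as np

rng = np.random.default_rng(0)
contrasts = {}
for dims in [2, 10, 100, 1000]:
    points = rng.random((500, dims))  # 500 random points in the unit hypercube
    query = rng.random(dims)          # one random query point
    d = np.linalg.norm(points - query, axis=1)
    # Relative gap between the farthest and the nearest point from the query
    contrasts[dims] = (d.max() - d.min()) / d.min()
    print(f"{dims:>5} dimensions: relative contrast = {contrasts[dims]:.2f}")
```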

Solution: A premium retail brand tackled this by applying domain expertise to select a focused set of behavioral variables with proven predictive power, then used principal component analysis to reduce dimensions further. This approach maintained predictive accuracy while dramatically improving computational efficiency.

Data Quality Issues

KNN is exceptionally sensitive to data quality. Outliers, missing values, and inconsistent scaling can severely distort results.

Solution: A telecommunications provider implemented a multi-stage data preparation pipeline specifically designed for KNN, including outlier detection, missing value imputation, and robust scaling techniques. This increased their predictive accuracy from 67% to 89%.

Computational Efficiency

As datasets grow, the computational requirements for K-nearest neighbors in market research can become prohibitive, especially for real-time applications.

Solution: Index structures such as KD-Trees and Ball Trees return the exact nearest neighbors far faster than brute-force search in low-to-moderate dimensions. Approximate methods such as Locality Sensitive Hashing trade a small accuracy loss for further speed. An e-commerce platform reduced computation time from 3.2 seconds to 0.08 seconds using these techniques, which was crucial for its real-time recommendation system.
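The sketch below checks this with scikit-learn’s `NearestNeighbors`, whose `algorithm` parameter selects brute force, a KD-tree, or a ball tree. The random 5-dimensional data is a hypothetical stand-in for customer features. Timings depend on hardware, and the tree structures lose their advantage as dimensionality rises. LSH is not part of scikit-learn and would need a separate library:

```python
import time

import numpy as np
from sklearn.neighbors import NearestNeighbors

rng = np.random.default_rng(0)
catalog = rng.random((20_000, 5))  # hypothetical low-dimensional customer features
queries = rng.random((200, 5))

results = {}
for algorithm in ["brute", "kd_tree", "ball_tree"]:
    nn = NearestNeighbors(n_neighbors=10, algorithm=algorithm).fit(catalog)
    start = time.perf_counter()
    _, idx = nn.kneighbors(queries)
    results[algorithm] = (idx, time.perf_counter() - start)
    print(f"{algorithm:>9}: {results[algorithm][1] * 1000:.1f} ms for 200 queries")

# Tree indexes are exact: each query gets the same neighbor set as brute force
brute_sets = np.sort(results["brute"][0], axis=1)
assert np.array_equal(brute_sets, np.sort(results["kd_tree"][0], axis=1))
assert np.array_equal(brute_sets, np.sort(results["ball_tree"][0], axis=1))
```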

Interpretation Pitfalls

Even though KNN is more interpretable than black-box algorithms, extracting meaningful insights still requires care.

Solution: A financial services firm created visualization tools showing how specific neighbors influenced each prediction, making patterns more evident to non-technical stakeholders. This increased implementation of model recommendations by 43%.
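The raw material for that kind of tool is the list of neighbors behind each prediction. Here is a sketch using scikit-learn’s `kneighbors` method. The customer IDs and feature values are hypothetical:

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical, already-scaled features: [tenure_years, support_tickets]
X = np.array([[5.0, 0.0], [4.5, 1.0], [6.0, 0.0],
              [0.5, 6.0], [1.0, 5.0], [0.8, 7.0]])
churned = np.array([0, 0, 0, 1, 1, 1])
customer_ids = ["C101", "C102", "C103", "C201", "C202", "C203"]

knn = KNeighborsClassifier(n_neighbors=3).fit(X, churned)
query = np.array([[0.9, 5.5]])

distances, idx = knn.kneighbors(query)
print("prediction:", knn.predict(query)[0],
      "churn probability:", knn.predict_proba(query)[0, 1])
for d, i in zip(distances[0], idx[0]):
    # Each line is one piece of evidence a stakeholder can inspect
    print(f"  similar to {customer_ids[i]} (distance {d:.2f}), churned={churned[i]}")
```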

Key Insights Summary

✅ K-nearest neighbors in market research excels at finding non-obvious patterns in customer behavior by leveraging the principle that similar customers tend to behave similarly.

✅ Unlike rule-based systems, KNN requires no assumptions about relationships between variables, allowing it to detect complex patterns traditional methods miss.

✅ The “K” value (number of neighbors to consider) critically impacts performance, with optimal values typically determined through cross-validation rather than theory.

✅ Feature selection and data preparation significantly impact KNN effectiveness—sometimes more than the algorithm implementation itself.

✅ While computationally intensive for large datasets, techniques like dimensionality reduction and approximate nearest neighbor algorithms can dramatically improve efficiency.

✅ KNN offers greater interpretability than black-box algorithms, making it easier to translate predictions into actionable business strategies.

✅ The most successful implementations combine KNN with other algorithms—regression for understanding, decision trees for explainability, and KNN for prediction.

What Makes SIS International a Top KNN Analysis Provider?

In four decades at the forefront of market research evolution, the transformation from intuition-based approaches to sophisticated algorithms like K-nearest neighbors in market research has been remarkable.

✔ GLOBAL REACH: With researchers across 120+ countries, cultural nuances that affect predictive validity can be captured and incorporated.

✔ 40+ YEARS OF EXPERIENCE: Since 1984, market research methodologies have evolved through multiple paradigms. K-nearest neighbors in market research has been refined through hundreds of implementations across industries, with each iteration improving both technical implementation and business integration.

✔ GLOBAL DATA BASES FOR RECRUITMENT: Access to over 53 million research participants worldwide ensures predictive models based on robust, representative samples.

✔ IN-COUNTRY STAFF WITH OVER 33 LANGUAGES: Effective predictive modeling requires nuanced understanding of cultural context that often gets lost in translation. Multilingual teams ensure nothing is missed, whether analyzing survey responses or interpreting behavioral patterns that might appear similar but hold different significance across cultures.

✔ GLOBAL DATA ANALYTICS: The most effective projects integrate K-nearest neighbors in market research with complementary analytical approaches, creating hybrid methodologies that maximize predictive power.

✔ AFFORDABLE RESEARCH: Sophisticated predictive modeling doesn’t require Fortune 500 budgets. Efficient global structures allow enterprise-grade analytics at midmarket price points.

✔ CUSTOMIZED APPROACH: Cookie-cutter algorithms consistently underperform. When standard KNN implementations showed limitations for a consumer electronics client, a custom ensemble approach combining multiple distance metrics increased predictive accuracy by 23% while reducing computational overhead.

Frequently Asked Questions About K-Nearest Neighbors in Market Research

How does K-nearest neighbors differ from other predictive algorithms in market research?

K-nearest neighbors in market research is fundamentally different from many alternatives because it makes no assumptions about relationships between variables. While regression models look for consistent mathematical relationships and decision trees create explicit rule hierarchies, KNN simply finds the most similar historical cases and uses their outcomes to predict new cases.

This makes KNN exceptionally good at detecting non-linear, complex patterns that other algorithms miss. A retail client discovered that purchase patterns followed counterintuitive sequences that regression completely missed but KNN detected naturally.

The tradeoff? KNN typically requires more data preparation and careful feature selection than some alternatives.

What types of market research questions is KNN best suited to answer?

K-nearest neighbors in market research excels at questions involving prediction, particularly when the relationships are complex or non-linear. It’s especially powerful for:

- Predicting which customers are likely to purchase specific products

- Identifying customers at risk of churn based on behavior patterns

- Recommending relevant products or services based on similarity

- Forecasting market responses to new offerings by finding historical analogs

- Detecting emerging customer segments based on behavioral similarity

KNN is less effective for questions focused on understanding which factors drive outcomes or quantifying their relative importance—regression techniques are better suited for those objectives.

How much data do we need for effective KNN implementation?

Data requirements depend on dimensionality and complexity. While KNN can work with relatively small datasets (a few hundred observations) in low-dimensional spaces, performance improves with more data—particularly as dimensions increase.

Can KNN work with both structured and unstructured market research data?

While KNN naturally works with structured numerical data, techniques exist to incorporate unstructured data as well. Text data can be transformed using methods like TF-IDF or word embeddings to create numerical representations that KNN can process.
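Here is a sketch of that route with scikit-learn. It builds TF-IDF vectors for hypothetical open-ended survey answers and uses KNN with cosine distance to assign a theme to a new answer. The answers and theme labels are invented:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical open-ended survey answers, each already coded with a theme
answers = [
    "delivery was late and the package arrived damaged",
    "shipping was slow and the parcel took two weeks",
    "the courier lost my parcel",
    "great price, cheaper than other stores",
    "good value for money and frequent discounts",
    "prices are low compared to competitors",
]
theme = ["delivery", "delivery", "delivery", "price", "price", "price"]

# Turn free text into sparse TF-IDF vectors
vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(answers)

# Cosine distance compares word mix rather than answer length
knn = KNeighborsClassifier(n_neighbors=3, metric="cosine", algorithm="brute").fit(X, theme)

new_answer = vectorizer.transform(["my parcel was late again"])
print(knn.predict(new_answer))  # ['delivery']
```

Words never seen during fitting (“again” here) are simply ignored by the vectorizer. Word embeddings are the usual next step when answers share meaning but not vocabulary.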

How do we determine the optimal K value for our specific application?

The optimal K value balances stability against responsiveness and must be determined empirically rather than theoretically. While heuristics like the elbow method provide starting points, cross-validation with your specific prediction objective is essential.

How does KNN handle categorical variables in market research?

Categorical variables require transformation before KNN can process them effectively. The three most common approaches are:

- One-hot encoding for nominal variables (creating binary columns for each category)

- Ordinal encoding for ordered categories (converting to numeric values preserving order)

- Target encoding for high-cardinality categories (replacing categories with target statistics)
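Here is a sketch of the three approaches on a hypothetical table. One-hot and ordinal encoding use scikit-learn. Target encoding is done by hand with a pandas group mean for transparency. The `city` column only stands in for a high-cardinality field. In real use, the target means must be computed on training data only, to avoid leaking the outcome into the features:

```python
import pandas as pd
from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder

df = pd.DataFrame({
    "region": ["North", "South", "East", "North"],        # nominal
    "income_band": ["low", "high", "medium", "medium"],   # ordinal
    "city": ["Lyon", "Osaka", "Lyon", "Austin"],          # stand-in for many categories
    "bought": [1, 0, 1, 0],
})

# Nominal: one binary column per region (sorted: East, North, South), no false ordering
onehot = OneHotEncoder(handle_unknown="ignore").fit(df[["region"]])
region_cols = onehot.transform(df[["region"]]).toarray()

# Ordinal: explicit category order so that low < medium < high
ordinal = OrdinalEncoder(categories=[["low", "medium", "high"]])
income_codes = ordinal.fit_transform(df[["income_band"]])

# Target encoding (simplified): replace each city by its mean outcome
city_means = df.groupby("city")["bought"].mean()
df["city_te"] = df["city"].map(city_means)

print(region_cols)
print(income_codes.ravel())
print(df[["city", "city_te"]])
```

For KNN, the encoded columns then go through the same scaling as the numeric features. Otherwise, an ordinal code running from 0 to 2 would weigh differently in the distance than a one-hot column running from 0 to 1.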

How can we interpret KNN results to drive business decisions?

Translating KNN predictions into business action requires bridging statistical outputs with decision frameworks. Successful approaches include:

- Creating “explanation layers” that identify which variables contributed most to similarity calculations

- Developing visualization tools showing how customers group and relate within the model

- Connecting predictions directly to business rules engines that trigger specific actions

- Building hybrid models where KNN generates predictions while other algorithms provide explanations

Our Location in New York

11 E 22nd Street, Floor 2, New York, NY 10010 T: +1(212) 505-6805

About SIS International

SIS International offers quantitative, qualitative, and strategic research. We provide data, tools, strategies, reports, and insights for decision-making. We also conduct interviews, surveys, focus groups, and other market research methods and approaches. Contact us for your next market research project.